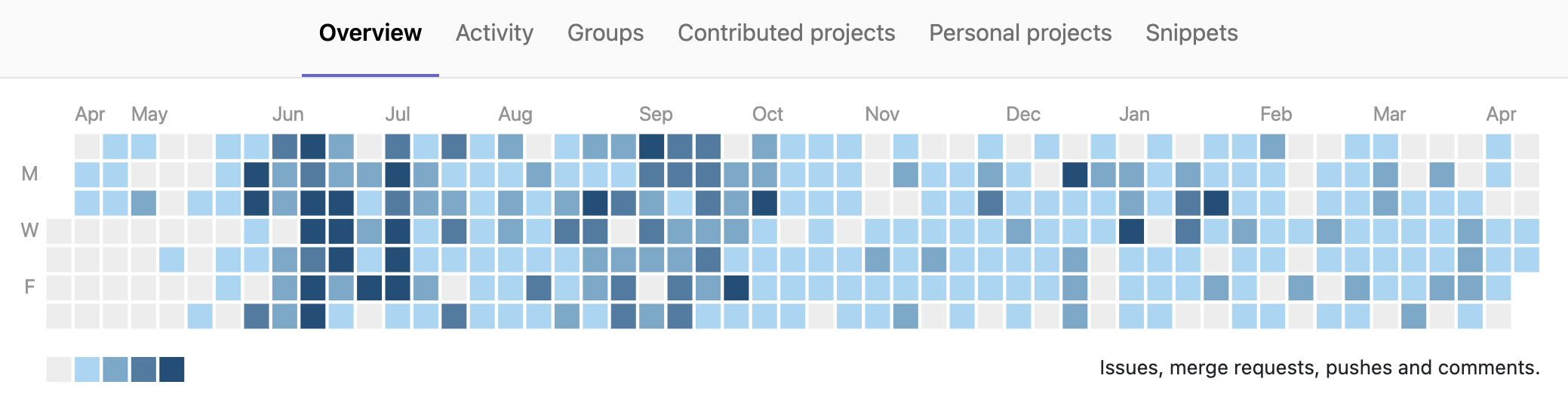

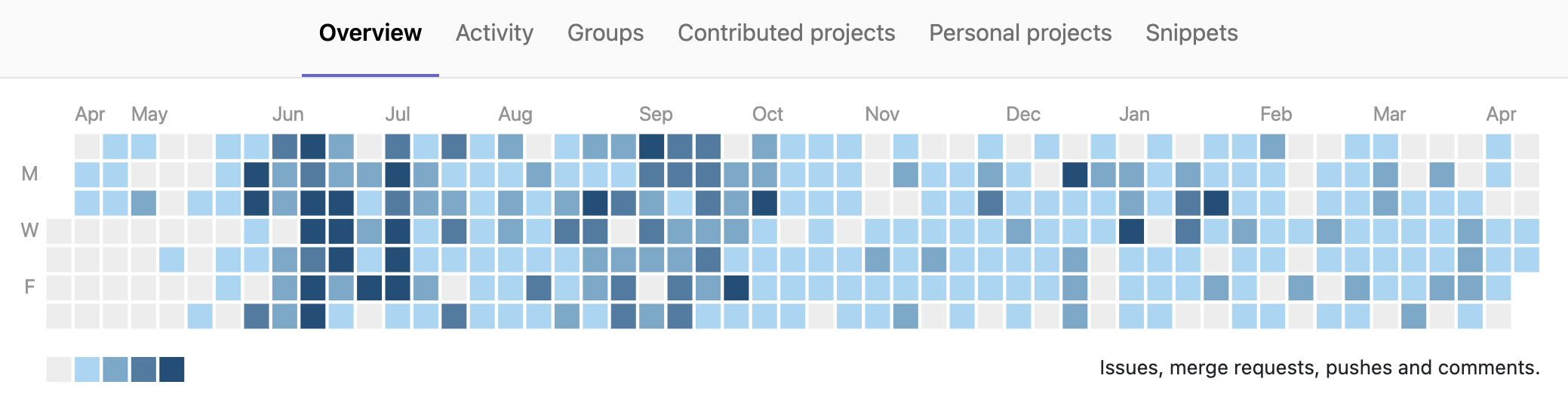

365/365 days of coding

All good things come to those who wait

About TUM and the whole city of Munich, I just want to say three words: classy, professional, and great.

“Many heads are better than one.” That is how fusion works. There are early, middle, and late fusion techniques. In this post, I focus on some late fusion techniques based on the scores of observations.
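As a rough illustration of score-based late fusion, here is a minimal sketch. It assumes each model has already produced per-class scores for every observation; the function names `fuse_scores` and `predict_labels`, the three combination rules, and the toy numbers are assumptions made for this example, not necessarily the techniques covered in the post.

```python
from math import prod
from statistics import mean

# Hypothetical combination rules applied to the scores of one class across models.
RULES = {"mean": mean, "max": max, "product": prod}

def fuse_scores(per_model_scores, rule="mean"):
    """Fuse scores given as [model][observation][class] into [observation][class]."""
    combine = RULES[rule]
    fused = []
    # zip(*per_model_scores) groups the score rows of one observation across models.
    for rows in zip(*per_model_scores):
        # zip(*rows) groups the scores of one class across models.
        fused.append([combine(class_scores) for class_scores in zip(*rows)])
    return fused

def predict_labels(fused):
    """Pick the class index with the highest fused score for each observation."""
    return [max(range(len(row)), key=row.__getitem__) for row in fused]

# Toy scores from two models for two observations and two classes.
model_a = [[0.7, 0.3], [0.4, 0.6]]
model_b = [[0.6, 0.4], [0.1, 0.9]]

fused_mean = fuse_scores([model_a, model_b], rule="mean")
fused_max = fuse_scores([model_a, model_b], rule="max")
labels = predict_labels(fused_mean)
```

With these toy scores, the mean rule picks class 0 for the first observation and class 1 for the second. Because fusion happens only on the scores, the individual models can be trained independently.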

Structured programming (SP) is a subset of object-oriented programming (OOP). Therefore, OOP can help in developing much larger and more complex programs than structured programming. Traditional procedural programming is far less dogmatic. You have data. You have functions. You apply the functions to the data. If you want to organize your program somehow, that’s your problem, and the language isn’t going to help you. In my working experience, if you want to try some models quickly, you can use SP step by step. But when working on a system with multiple modules, you should use OOP for Deep Learning because of its encapsulation and inheritance. Besides that, OOP makes your Deep Learning program clearer and more concise.
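To make the encapsulation and inheritance point concrete, here is a small sketch in plain Python. The classes `Layer`, `Scale`, `ReLU`, and `Sequential` are hypothetical names invented for this illustration and do not come from any particular Deep Learning library.

```python
class Layer:
    """Base class: every layer exposes the same forward() interface."""

    def forward(self, x):
        raise NotImplementedError


class Scale(Layer):
    """Multiplies every input by a factor kept private to the object (encapsulation)."""

    def __init__(self, factor):
        self._factor = factor

    def forward(self, x):
        return [self._factor * v for v in x]


class ReLU(Layer):
    """Clamps negative values to zero."""

    def forward(self, x):
        return [max(0.0, v) for v in x]


class Sequential(Layer):
    """Chains layers; it is itself a Layer, so models can be nested (inheritance)."""

    def __init__(self, *layers):
        self._layers = list(layers)

    def forward(self, x):
        for layer in self._layers:
            x = layer.forward(x)
        return x


model = Sequential(Scale(2.0), ReLU())
output = model.forward([-1.0, 0.5, 3.0])  # [0.0, 1.0, 6.0]
```

Adding a new module only means writing another `Layer` subclass; the code that calls `forward` does not need to change.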

When working with a recurrent neural network model, we usually use the last unit, or some fixed units, of the recurrent sequence to predict the label of an observation. This is shown in this picture.
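The idea of predicting from the last unit or from a few fixed units can be sketched with a tiny NumPy recurrent network. This is only an illustration under assumptions: the vanilla tanh cell, the random weights, the averaging of the selected states, and names such as `rnn_hidden_states` and `fixed_steps` are all made up for the example.

```python
import numpy as np

def rnn_hidden_states(inputs, w_xh, w_hh, b_h):
    """Run a vanilla tanh RNN and return the hidden state at every time step."""
    h = np.zeros(w_hh.shape[0])
    states = []
    for x_t in inputs:
        h = np.tanh(x_t @ w_xh + h @ w_hh + b_h)
        states.append(h)
    return np.stack(states)  # shape: (seq_len, hidden_dim)

rng = np.random.default_rng(0)
seq_len, input_dim, hidden_dim, n_classes = 5, 3, 4, 2

inputs = rng.normal(size=(seq_len, input_dim))
w_xh = rng.normal(size=(input_dim, hidden_dim))
w_hh = rng.normal(size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)
w_out = rng.normal(size=(hidden_dim, n_classes))

states = rnn_hidden_states(inputs, w_xh, w_hh, b_h)

# Option 1: classify from the last hidden state only.
logits_last = states[-1] @ w_out

# Option 2: classify from a few fixed time steps (here averaged; an assumption).
fixed_steps = [1, 3]
logits_fixed = states[fixed_steps].mean(axis=0) @ w_out
```

Both options reduce a variable-length sequence of hidden states to one fixed-size vector before the output layer.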

Spatial Pyramid Pooling (SPP) [1] is an excellent idea that removes the need to resize an image before feeding it to the neural network. In other words, it uses multi-level pooling to adapt to images of multiple sizes while keeping their original features. SPP is inspired by:

Preprocessing data is a big step in any machine learning problem. In this post, I will show a trick to import text data such as word embeddings or a dictionary of lists, which usually has a format like:

I used to solve a lot of Mathematical Olympiad problems, but the most memorable one, and the one I love, is a numerical problem. It took me three hours to solve. Why is it beautiful? Because it is solved with some wonderful techniques: